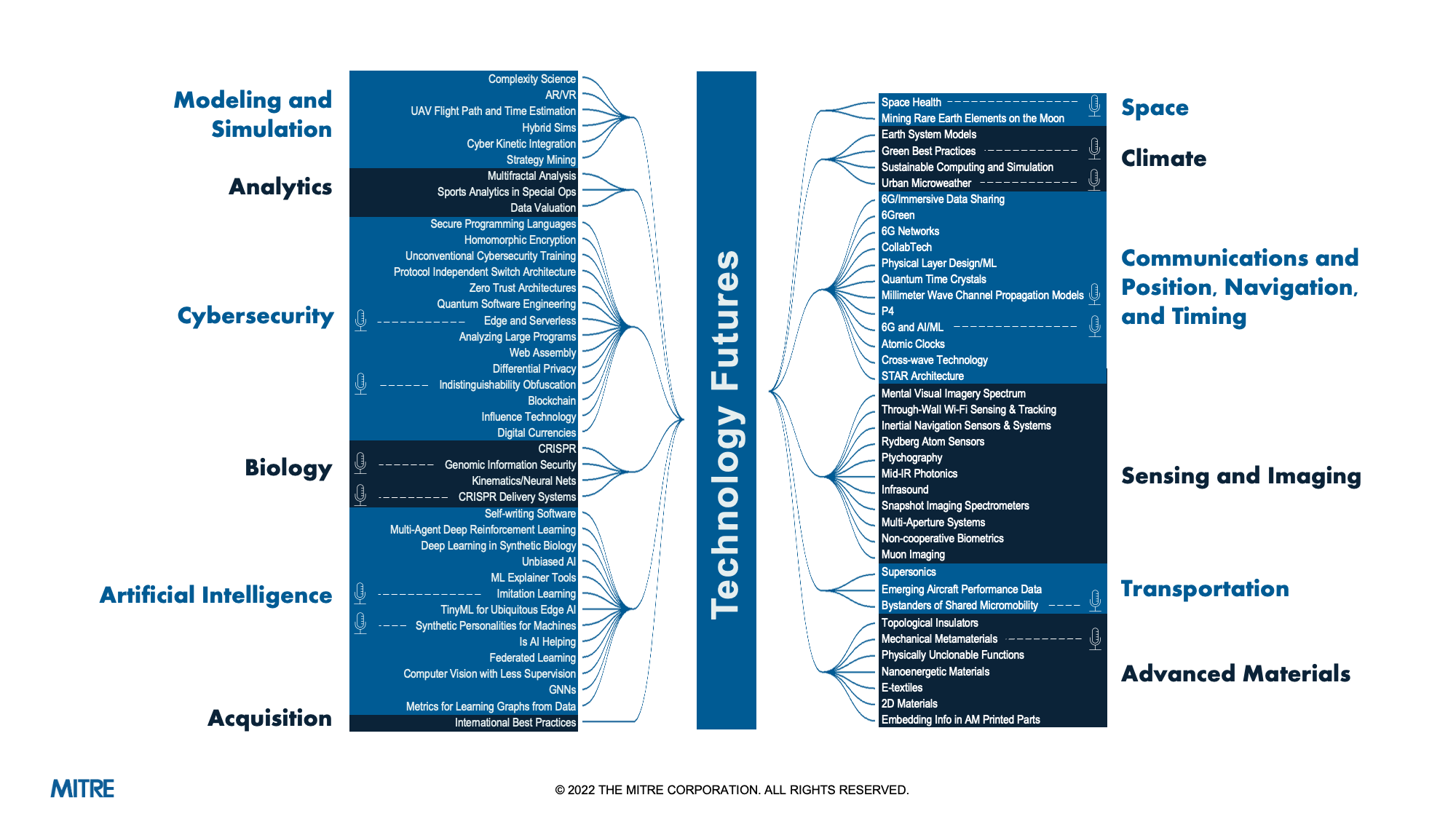

Research

Note

This page contains the abstracts of many of our Tech Futures research reports. To access full reports and executive summaries, please contact rosfjord@mitre.org or hfarris@mitre.org.

Acquisition

FY18

International Best Practices

Principal Investigators: Kelly Hough, Erin M. Schultz, and Virginia Wydler

Abstract

U.S. program offices need help implementing complex international acquisitions as their missions expand to include the global use of U.S. defense weapons. This research documents best practices that will allow federal program managers to implement international acquisition strategies suited to their position in the acquisition lifecycle. It compares the international acquisition best practices of U.S. agencies, foreign entities, and commercial industry for military systems and space exploration, identifying practices that program managers can adopt to advance their international acquisition strategies.

Advanced Materials

FY21

Mechanical Metamaterials

Principal Investigators: Casey Corrado, David A. Hurley, Ph.D. and Dong-Jin Shim, Ph.D.

Abstract

Metamaterials are an emerging class of materials that use engineered internal geometries at small length scales to produce bulk material properties not found in nature. Mechanical metamaterials are the subcategory focused on mechanical properties. Three focus areas were selected for in-depth review: thermal, shock and impact, and acoustics and vibration. For each focus area, the report discusses the metamaterial mechanism, capabilities, and potential applications, and assesses its Technology Readiness Level (TRL).

FY18

Critical Analysis of Graphene Research and Applications

Principal Investigators: W. Jody Mandeville, Ph.D. and Geoff Warner, Ph.D.

Abstract

We review the state of the art in graphene applications, a topic of interest to MITRE’s sponsors. Graphene is a novel material that exhibits a wide range of exceptional physical characteristics, including tensile strength, elasticity, electronic carrier mobility, optical absorption, and impermeability. These properties, individually or in combination, make graphene promising for a broad variety of applications. Recent advances in the fabrication of high-quality, large-area graphene are opening new potential uses. The applications discussed in this report are optical modulation, photodetection, electromagnetic shielding, and terahertz generation and detection.

Analytics

FY20

A Review of Data Valuation Approaches in Industry and Government

Principal Investigators: Mike Fleckenstein and Ali Obaidi

Abstract

The importance of data as an asset in both the private and public sectors has steadily increased, and organizations are striving to manage it as such. This remains a challenge, however, because data is an intangible asset and there is currently no standard for measuring its value. Approaches include market-based valuation, economic models, and applying dimensions to data and context. This paper examines these approaches and their benefits and limitations.

FY19

Leveraging Advances in Spatial Temporal Analytics for Use in Mission Performance Analysis

Principal Investigators: Brian W. Colder, Ph.D., Kevin R. Gemp and Anthony C. Santago II, Ph.D.

Abstract

In the sports industry, recent advances in tracking technologies have initiated a revolution in player evaluation by accurately recording player and ball movement in space and time throughout an entire game or match. Many of these techniques apply to any free-flowing activity in which teams of people, such as warfighters or law enforcement officials, move in coordinated patterns and formations. This report provides an overview of the development of spatial-temporal analytics in sports, the tracking technologies used to collect the data, and the implications and use cases for extending these methods more broadly.
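To make "patterns and formations" concrete, the sketch below computes three simple spatial-temporal features from tracking data: a team's centroid in one frame, its spread (mean distance of members from that centroid), and a player's speed between two samples. The positions, sampling interval, and function names are hypothetical illustrations, not metrics taken from the report.

```python
import math

def centroid(positions):
    """Mean (x, y) of a team's positions in one tracking frame."""
    n = len(positions)
    return (sum(x for x, _ in positions) / n, sum(y for _, y in positions) / n)

def spread(positions):
    """Mean distance of team members from the team centroid (a simple formation metric)."""
    cx, cy = centroid(positions)
    return sum(math.hypot(x - cx, y - cy) for x, y in positions) / len(positions)

def speed(p0, p1, dt):
    """Speed between two consecutive positions sampled dt seconds apart."""
    return math.hypot(p1[0] - p0[0], p1[1] - p0[1]) / dt

# Hypothetical frame: four team members at the corners of a 10 m square.
frame = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
print(centroid(frame))                            # (5.0, 5.0)
print(round(spread(frame), 3))                    # 7.071
print(speed((0.0, 0.0), (1.5, 2.0), dt=0.5))      # 5.0 (m/s)
```

Tracking these values frame by frame yields time series (for example, a team contracting or stretching its formation) that can be compared across teams or missions.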

Artificial Intelligence

FY21

Imitation Learning

Principal Investigator: Amanda Vu

Abstract

Imitation learning, or learning by example, is an intuitive way to teach new behaviors to an autonomous system. In imitation learning, an expert demonstrates a new behavior to the robot; in turn, the robot learns the target task by observing and imitating the expert. This paradigm of “learning from demonstration” offers a compelling alternative to current manually engineered methods, which often require extensive mathematical modeling and domain knowledge. This work provides an introduction to imitation learning, covering both the theoretical aspects of imitation learning (e.g., key algorithms in the field, mathematical foundations) and the practical aspects of setting up an imitation learning system (e.g., algorithmic and experiment design decisions for the practitioner, experimental studies). This white paper also introduces ilpyt, an open-source imitation learning toolbox built at MITRE. The toolbox, which contains modular implementations of common deep imitation learning algorithms in PyTorch, can accelerate and bootstrap research in the imitation learning field.
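Behavioral cloning, the simplest form of imitation learning, treats expert demonstrations as a supervised dataset of (state, action) pairs. The sketch below fits a linear policy `action = w * state + b` to hypothetical one-dimensional demonstrations by least squares. It is a toy illustration of the idea, not the ilpyt API; a deep imitation learning library would replace the linear fit with a neural network trained in PyTorch.

```python
def fit_linear_policy(states, actions):
    """Behavioral cloning: least-squares fit of action = w * state + b."""
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    var = sum((s - mean_s) ** 2 for s in states)
    w = cov / var
    b = mean_a - w * mean_s
    return lambda s: w * s + b

# Hypothetical expert: always steers toward the origin with action = -0.5 * state.
demo_states = [-4.0, -2.0, 0.0, 2.0, 4.0]
demo_actions = [-0.5 * s for s in demo_states]

policy = fit_linear_policy(demo_states, demo_actions)
print(policy(3.0))  # -1.5: the cloned policy imitates the expert on an unseen state
```

Because the learner only sees states the expert visited, real systems must cope with compounding errors when the robot drifts into unfamiliar states, one motivation for the more advanced algorithms a toolbox provides.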

FY21

Synthetic Personalities for Machines

Principal Investigators: Sara Beth Elson, Ozgur Eris, Natalie Friedman, Sarah Howell, and Jeff Stanley

Abstract

This study presents a survey of psychology research on models of human personality and explores their applicability to building distinctive and useful traits into machines. It also presents a survey of human-machine interaction research and analyzes how humanlike characteristics and behaviors have been implemented in machines, including robots, digital assistants, decision support systems, and vehicles. These two parallel lines of inquiry enable the identification of principles and a systematic process for conceptualizing and expressing personality when designing machines.

FY20

TinyML for Ubiquitous Edge AI

Principal Investigator: Stanislava Soro

Abstract

TinyML is a fast-growing multidisciplinary field at the intersection of machine learning, hardware, and software that enables sensor data analytics to run on embedded (microcontroller-powered) devices at extremely low power (in the milliwatt range and below). TinyML supports the development of power-efficient, compact machine learning and neural network models customized for battery-operated, resource-constrained devices. In this report, we discuss the major challenges and technological enablers that drive this field’s expansion. TinyML will open the door to new types of edge services and applications that do not rely on cloud processing but instead thrive on edge inference and autonomous reasoning.
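Post-training quantization is one widely used way to make models compact enough for microcontrollers: 32-bit floating-point weights are stored as 8-bit integers plus a scale factor. The sketch below shows symmetric per-tensor int8 quantization of a few hypothetical weights. It illustrates the general technique rather than anything specific to the report; production toolchains add refinements such as per-channel scales and integer-only arithmetic.

```python
def quantize_symmetric_int8(weights):
    """Symmetric per-tensor int8 quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from their int8 codes."""
    return [scale * v for v in q]

weights = [0.82, -1.27, 0.05, 0.4, -0.003]  # hypothetical float32 weights
q, scale = quantize_symmetric_int8(weights)
restored = dequantize(q, scale)
print(q)  # each value now fits in one signed byte instead of a 4-byte float
# Rounding error per weight is at most half a quantization step.
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-12)
```

The 4x reduction in weight storage, plus cheaper integer math, is part of what lets neural network inference fit within microcontroller memory and power budgets.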

FY18

Deep Learning-Enhanced Biology

Principal Investigators: Christopher D. Garay, Ph.D., Tonia M. Korves, Ph.D. and Matthew W. Peterson, Ph.D.

Abstract

Deep learning has resulted in great advances in fields such as computer vision and language translation. These methods are increasingly being applied to DNA, RNA, and protein data. When combined with biotechnology advances in gene-editing and laboratory robotics, these methods could create enhanced biological capabilities. This report describes applications of deep learning to biomolecular data, and the impact this is having on bioinformatic capabilities and practical applications. We identified seven bioinformatic capabilities that deep learning is being applied to: predicting transcription and translation, predicting small molecule and protein binding, predicting gene and protein function, DNA variant calling, selecting CRISPR guide sequences, generating novel functional DNA and protein sequences, and predicting complex traits from genotypes. We summarize current technical trends, challenges in applying deep learning to biomolecular data, and potential future directions.

FY18

Unbiased AI

Principal Investigators: Ellen Badgley, James Morris-King and Jason Veneman

Abstract

This document provides an overview of the current state of the discourse on bias and fairness in artificial intelligence from the perspective of public-facing government agencies. We summarize current case studies; explore the problem of defining “algorithmic fairness,” especially in the context of machine learning systems; and survey emerging recommendations for eliciting and establishing fairness standards among agencies and the public. We also explore specific technical solutions that, once fairness standards have been identified, might be used to detect and mitigate bias in machine learning systems. We follow with a discussion of particular sponsors and domains for which algorithmic fairness issues are critical and timely, and conclude with a series of recommendations to provide assistance and guidance.
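One common way to detect bias in a classifier's outputs is to check demographic parity, that is, to compare the rate of favorable decisions across groups. The sketch below computes the demographic parity difference for hypothetical loan-style decisions. It is only one of many competing fairness definitions, and the data and function names are illustrative assumptions rather than methods from the report.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Fraction of favorable (1) decisions within each group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for d, g in zip(decisions, groups):
        totals[g] += 1
        favorable[g] += d
    return {g: favorable[g] / totals[g] for g in totals}

def demographic_parity_difference(decisions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical approvals (1 = approved) for applicants in groups "A" and "B".
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(decisions, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(decisions, groups))  # 0.5
```

Other definitions, such as equalized odds, condition on the true outcome and can conflict with demographic parity, which is why agreeing on a fairness standard must come before choosing a detection method.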

Biology

FY21

Delivery Systems for CRISPR Technology

Principal Investigator: Heath Farris, Ph.D.

Abstract

Delivering gene therapies to their target cells within the human body has long been a goal and promise of modern medicine. As genome editing technologies have emerged in molecular biology, one of the major obstacles to realizing their full potential as therapeutics has been controlling where they are placed within the targeted tissues of the body. Control over the editing tools themselves has far outpaced the ability to deliver the editing machinery to target cells within a biological system. In its brief history, CRISPR technology has facilitated the discovery of many new mechanisms and principles in the biological sciences. The precision and simplicity of the technique have sparked interest in its potential to cure diseases and to control the biological world at the molecular level; however, the same challenges that hindered CRISPR’s genome editing predecessors also limit the application of this technology. Current systems for placing CRISPR components where they can be effective are deficient, creating demand for new options, and innovations within the biological sciences and other fields are being exploited to create new delivery modalities. This technical report explores the current state of emerging in vivo delivery systems for CRISPR technology, characterizing viral vectors, lipid systems, nanoparticles, extracellular vesicles, chemical systems, physical systems, and hybrid systems. Each system is evaluated on its ability to deliver CRISPR components to their respective target tissues in vivo.

FY18

An Exploratory Study on Interfacing the Simulation Training Exercise Platform (STEP) with Operational Simulators

Principal Investigators: Douglas Flournoy and Edward Y. Hua, Ph.D.

Abstract

This report introduces the Simulation Training Exercise Platform (STEP) technology. One of its core capabilities is mapping the effect-impact relationship between the cyber and operational domains within a simulation environment. With this technology, we aim to address the problem of understanding precisely how effects in one domain could propagate to the other and manifest as specific impacts in a complex simulation environment. By analyzing simulation data, STEP could benefit a broad range of military and civilian sponsors entrusted with mission-critical responsibilities. We also identify three technical hurdles that could affect sponsor acceptance of STEP and lay out a transition path to the relevant sponsors.

Climate

FY21

Green Best Practices

Principal Investigators: Emily Holt and Casey Corrado

Abstract

While it is commonly accepted that Climate Change needs to be addressed to protect both human and environmental health, it is not widely understood what steps must be taken to accomplish this daunting task. Additionally, despite the exploding popularity of the term, there is currently no formal definition of what constitutes a “green” company or “green” best practices. We found that companies considered “green” have well-documented, quantifiable improvements in their sustainability plans and initiatives. Their multi-year goals, with progress tracked from year to year, tend to cover the following areas: reducing carbon emissions, obtaining energy from renewable sources, diverting waste from landfills, obtaining third-party certifications for buildings, conserving water, increasing “green” requirements for suppliers, and managing fleets sustainably. To address the gap between industry and government practices, and to capitalize on recent interest and investment in “green,” we recommend that all U.S. government agencies formalize and publicly release sustainability policies with quantifiable goals, identified practices to be implemented, and defined metrics for measuring progress.

FY21

Urban Microweather

Principal Investigator: Mike Robinson

Abstract

Risks and impacts associated with both increased urbanization (continued development, buildup, and sprawl) and ongoing Climate Change are anticipated to create additional, combined risks to city resiliency and sustainability. Comprehensively understanding the implications and potential risks of Climate Change in dense urban areas requires closer examination of how the atmospheric environment within cities may be altered under model-based projections. Ideally, assessing how changes in the urban landscape and climate will affect the future health and prosperity of cities requires “street-level” insight into how perturbed urban microscale weather affects the well-being of the city’s inhabitants.

FY21

Sustainable Computing and Simulation

Principal Investigators: Suzanne M. DeLong, Christine E. Harvey, and Andreas Tolk

Abstract

Today’s computing contributes significantly to carbon emissions, a pivotal concern for our environment. However, computing also enables smart devices and, at larger scale, smart cities, which could improve quality of life. Society needs to keep finding ways to make computing sustainable, and those gains must outpace the growth of computational needs. The authors conducted a literature survey to identify ways to make computing more sustainable and how simulation and machine learning could contribute. The surveyed publications addressed (1) green computing challenges in production, procurement, and disposal, as well as green policy, regulations, and legislation; (2) finding efficiencies through distributed technologies; (3) methods to improve energy efficiency; and (4) areas where simulation and machine learning can be applied to continue the path toward sustainable computing.

Communications and Position, Timing, and Navigation

FY21

6G and Artificial Intelligence & Machine Learning

Principal Investigators: DJ Shyy, Ph.D., Curtis Watson, Ph.D. and Kelvin Woods, Ph.D.

Abstract

As fifth generation (5G) deployment accelerates and its standards approach a steady state, researchers have turned their attention toward 6G. New use cases and potential performance shortfalls have spurred this research. Early efforts center on fundamental research that will support target goals such as a 1 Tbps peak data rate, 1 ms end-to-end latency, and up to a 20-year battery life for this next generation of communication networks. International conferences have sprung up to support 6G research, with topics including THz communications, quantum communications, big data analytics, cell-free networks, and pervasive artificial intelligence (AI). In this paper we focus on pervasive AI, which has the potential to drastically shape 6G networks. Achieving the targeted gains in data rate and latency will require an efficient network that can dynamically allocate resources, change traffic flows, and process signals in an interference-rich environment. Pervasive AI is a leading candidate for these tasks, and AI and machine learning (ML) will play an important role in enabling 6G technology by optimizing networks and designing new waveforms.

FY21

A Comparison of Large-Scale Path Loss Models for XG mmWave Channels

Principal Investigators: Kevin Burke and Bindu Chandna, Ph.D.

Abstract

As communications technology advances beyond that deployed in 4G systems, a number of new concepts for 5G and 6G (collectively referred to as XG) systems are being explored, among them the use of millimeter wave (mmWave) radio frequencies and small aerial platforms. Supporting the research, development, and deployment of XG equipment requires channel models for frequencies that were not specified for earlier systems. In this report, we investigate and compare models proposed by different organizations for terrestrial and airborne applications, with an emphasis on mmWave frequencies. Because of our interest in leveraging XG equipment in outdoor intelligence, surveillance, and reconnaissance (ISR) scenarios, we concentrate on the urban macrocell (UMa) and rural macrocell (RMa) terrestrial environments, as well as the urban macrocell aerial vehicle (UMa-AV) and rural macrocell aerial vehicle (RMa-AV) airborne environments.
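Large-scale path loss models are usually expressed in decibels as a function of distance and carrier frequency. As a reference point, the sketch below computes free-space path loss, FSPL = 20·log10(4πdf/c), a common baseline against which empirical models such as UMa and RMa are compared. The model-specific coefficients compared in the report are not reproduced here, and the frequencies and distance are illustrative choices.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def fspl_db(distance_m, freq_hz):
    """Free-space path loss in dB between isotropic antennas: 20*log10(4*pi*d*f/c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)

# Illustrative carriers: a mid-band 5G frequency and two mmWave frequencies.
for f_ghz in (3.5, 28.0, 60.0):
    loss = fspl_db(100.0, f_ghz * 1e9)
    print(f"{f_ghz:5.1f} GHz at 100 m: {loss:.1f} dB")
```

Moving from 3.5 GHz to 28 GHz at a fixed distance adds 20·log10(8) ≈ 18.1 dB of free-space loss, and each doubling of distance adds about 6 dB; empirical models then add environment-dependent terms on top of this kind of distance and frequency dependence.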

FY21

Deploying P4 in Resource Constrained Environments

Principal Investigators: Ahri Akiyama and Carter B.F. Casey

Abstract

The P4 network programming language, a recent advancement in Software-Defined Networking (SDN), is growing in popularity but remains under-studied in realistic, resource-constrained networks. By analyzing the contributions P4 brings to SDN and examining both existing and future use cases, we demonstrate its potential to improve network utilization, protocol interoperability, and deployment cost in resource-constrained environments. As this technology matures, we expect that real-world deployments of P4 will provide considerable benefits to resource-constrained networks.
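P4 programs describe packet processing as a pipeline of match-action tables whose entries a controller installs at runtime. The sketch below models a single longest-prefix-match (LPM) table keyed on destination IPv4 address. It is a conceptual stand-in written in Python, not P4 code, and the table entries, action names, and port numbers are hypothetical.

```python
import ipaddress

class LpmTable:
    """Toy model of a P4 match-action table keyed on destination IPv4 address (LPM)."""

    def __init__(self, default_action=("drop",)):
        self.entries = []  # (network, action) pairs, as a controller would install them
        self.default_action = default_action

    def add_entry(self, prefix, action):
        self.entries.append((ipaddress.ip_network(prefix), action))

    def apply(self, dst_ip):
        addr = ipaddress.ip_address(dst_ip)
        matches = [(net, act) for net, act in self.entries if addr in net]
        if not matches:
            return self.default_action
        # The longest matching prefix wins, mirroring P4's `lpm` match kind.
        return max(matches, key=lambda m: m[0].prefixlen)[1]

table = LpmTable()
table.add_entry("10.0.0.0/8", ("forward", 1))
table.add_entry("10.1.0.0/16", ("forward", 2))
print(table.apply("10.1.2.3"))     # ('forward', 2)
print(table.apply("10.9.9.9"))     # ('forward', 1)
print(table.apply("192.168.0.1"))  # ('drop',)
```

In P4 the table's key, match kind, and available actions are declared in the program, while entries like the two above are populated by the control plane; that separation is what lets operators change forwarding behavior without replacing hardware.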

FY21

Quantum Time Crystals

Principal Investigators: Jody Mandeville, Ph.D. and Geoffrey Warner, Ph.D.

Abstract

In recent years, many experimental groups have observed a new phenomenon called a “time crystal.” Time crystals are driven quantum systems exhibiting stable oscillations at integer multiples of the driving period. While the evidence for their existence appears indisputable, the interpretation of this physical state, and the potential for its application to problems of quantum computing, sensing, and metrology, is still unclear. In this report, we describe the theoretical basis of the time crystal concept, review details of its various experimental realizations, and discuss potential applications and open questions.

FY19

Atomic Clocks

Principal Investigators: David R. Scherer and Bonnie L. Schmittberger

Abstract

Atomic clocks provide the accurate and precise time and frequency that enable many communications, synchronization, and navigation systems in modern life. GPS and other satellite navigation systems, voice and data telecommunications, and timestamping of financial transactions all rely on precise timing enabled by atomic clocks. This report provides a snapshot and outlook of atomic clocks and the applications they enable at the time of writing. We provide a concise summary of the performance and physics of operation of current and future atomic clock products, along with a mapping between types of clocks and potential applications. Additionally, we present examples of emerging clock technologies and prototype demonstrations, with a focus on technologies expected to provide commercial or military utility within the next decade.
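A short worked example connects clock stability to navigation. A clock with a constant fractional frequency offset y accumulates a time error of roughly y·t, and because satellite navigation measures range by signal travel time, a timing error Δt becomes a ranging error of about c·Δt. The sketch below applies those two relations; the offsets are illustrative values, not performance figures from the report.

```python
C = 299_792_458.0  # speed of light, m/s

def time_error_s(fractional_offset, elapsed_s):
    """Time error accumulated by a clock with a constant fractional frequency offset."""
    return fractional_offset * elapsed_s

def range_error_m(timing_error_s):
    """Range error caused by a timing error, since the signal travels at c."""
    return C * timing_error_s

one_day = 86_400.0
for offset in (1e-9, 1e-11, 1e-13):  # illustrative offsets, not values from the report
    dt = time_error_s(offset, one_day)
    print(f"offset {offset:.0e}: {dt * 1e9:.3f} ns/day -> {range_error_m(dt):.3f} m")
```

An offset of 1e-11 left uncorrected for a day yields about 864 ns of time error, or roughly 259 m of range error, which illustrates why navigation systems depend on very stable clocks and regular clock corrections.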

Cybersecurity

FY21

Hidden in the Noise: Frontiers of Differential Privacy

Principal Investigator: Ken Smith, Ph.D.

Abstract

Privacy has been a confoundingly hard problem for years. Strategies to preserve the privacy of personal information while offering useful derived products have repeatedly been compromised by attacks that use correlation with external data and brute-force computation, and stakeholders have wrestled with navigating the privacy/utility tradeoff. Differential Privacy (DP) offers a promising option with numerous advantages: privacy is measurable at the personal level, DP is impervious to brute-force and external correlation attacks, it clearly relates privacy to utility, and its solid mathematical foundation provides a framework that unexpectedly addresses other hard problems (e.g., robustness against adversarial examples in machine learning). DP is being widely studied in academia and pushed toward deployment in various venues (e.g., Google’s RAPPOR system). However, its clean mathematical model differs from many real-world use cases, raising issues and highlighting frontiers along the road to practical deployment. This paper provides an introduction to the theory and practice of differential privacy and presents a sampling of existing “wins” that illustrate the promise of DP.
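The textbook way to achieve ε-differential privacy for a numeric query is the Laplace mechanism: add noise drawn from a Laplace distribution with scale Δf/ε, where Δf is the query's sensitivity (1 for a counting query, since one person changes the count by at most 1). The sketch below applies it to a hypothetical count, sampling Laplace noise as the difference of two exponential draws. It is an illustration only; a production DP system would also need privacy-budget accounting and protection against floating-point attacks on naive noise sampling.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Laplace mechanism: release the count plus Laplace(sensitivity / epsilon) noise."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(42)
# Smaller epsilon means stronger privacy and therefore noisier answers.
for eps in (0.1, 1.0, 10.0):
    print(eps, round(private_count(1000, eps, rng), 2))
```

The noise scale depends only on sensitivity and ε, never on the data or on what an attacker already knows, which is the source of the robustness to external correlation attacks described above.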

FY21

Practical Implications of Indistinguishability Obfuscation

Principal Investigator: Moses D. Liskov, Ph.D.

Abstract

Indistinguishability obfuscation (iO) is a powerful theoretical cryptographic primitive that has attracted considerable attention. iO transforms a circuit into an obfuscated version that can still be executed, while making the obfuscations of functionally equivalent circuits indistinguishable from one another. Indistinguishability obfuscation has been called the “Crown Jewel of Cryptography.” This report investigates the practical significance of iO for important government sponsor problems. Our main conclusion is that while iO is an exciting and vigorous area of cryptologic research, it is extremely expensive and impractical for use now or in the near future. Furthermore, although it has been shown to be a powerful and versatile concept, applications that actually require iO tend to have tenuous relevance to real-world problems. Given its cost, significant investment in the area would be hard to justify without vastly transformative potential, which is not evident at this time.

FY21

WebAssembly: Current and Future Applications

Principal Investigators: Justin Brunelle and David Bryson

Abstract

This report introduces WebAssembly (Wasm) technology, with the goal of educating potential users and motivating further exploration and use. To that end, we explain what Wasm is and how it works. A survey of the current state of the art highlights a broad spectrum of Wasm capabilities and its growing ecosystem of tools and support. The report also details the Wasm security model and outlines important security considerations, along with recommendations for further research. Finally, we provide several real-world examples of Wasm use in both client-side and server-side environments. Given Wasm’s open nature, broad industry support and use, and growing ecosystem of tools and language support, we envision it playing an increasingly important role in a wide range of applications.

FY21

Zero Trust Architectures: Are We There Yet?

Principal Investigators: Deirdre Doherty and Brian McKenney

Abstract

The movement toward Zero Trust Architectures (ZTA) aligns with cybersecurity modernization strategies and practices to deter and defend against dynamic threats, both inside and outside traditional enterprise perimeters. The “Executive Order on Improving the Nation’s Cybersecurity,” issued May 12, 2021, directs executive agencies to “develop a plan to implement Zero Trust Architecture.” Implementing ZTA requires integrating existing and new capabilities, as well as buy-in across the enterprise. Successful implementations will require multi-year planning that includes determining drivers and use cases, policy development, architecture development, technology readiness assessment, pilots, user training, and phased deployments. This ZTA Tech Watcher report covers background, applicability, and benefits to organizations, as well as outstanding challenges and issues, and offers recommendations.
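The core zero trust idea is that every access request is evaluated explicitly rather than trusted because it originates inside the network perimeter. The sketch below shows a toy policy decision function in that spirit. The request attributes (MFA status, device compliance, network location, resource sensitivity) and the rules are hypothetical assumptions chosen for illustration, not a policy taken from the Executive Order or the report.

```python
from dataclasses import dataclass

@dataclass
class AccessRequest:
    user_authenticated_mfa: bool
    device_compliant: bool
    on_corporate_network: bool  # deliberately ignored by the policy below
    resource_sensitivity: str   # hypothetical levels: "low" or "high"

def decide(request: AccessRequest) -> str:
    """Evaluate each request on identity and device posture; location alone grants nothing."""
    if not request.user_authenticated_mfa:
        return "deny"
    if request.resource_sensitivity == "high" and not request.device_compliant:
        return "deny"
    return "allow"

inside_but_unverified = AccessRequest(False, True, True, "low")
remote_and_verified = AccessRequest(True, True, False, "high")
print(decide(inside_but_unverified))  # deny: being on the network is not enough
print(decide(remote_and_verified))    # allow
```

Real deployments evaluate far richer signals continuously, but even this toy shows the shift from "where is the request coming from?" to "who and what is making it?"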

FY20

Analyzing Very Large Problems

Principal Investigator: Edward K. Walters, Ph.D.

Abstract

Program analyses, which automatically determine whether programs have undesirable properties or potential bugs, are a critical part of the software development process. However, they are difficult to scale to the code sizes (e.g., millions of lines) encountered in many government and military projects. Our work examines recent research and current industry best practices to identify new techniques for scaling these analyses. We begin with a brief overview of program analysis methods, along with some sample analyses and a description of potential difficulties in integrating these methods into a software engineering process. We summarize cutting-edge research that could improve the performance of a number of common analysis techniques, associating these improvements with practices at some leading software companies. We conclude with recommendations for ways forward, including greater reliance on composable and inexact analyses.

FY20

Edge and Serverless

Principal Investigators: Michael J. Vincent and Brinley Macnamara

Abstract

Edge and serverless computing have been hailed as emerging technologies with the potential to both reshape internet architectures and redefine the way internet services are developed and deployed. Edge computing is a model for internet architecture that brings compute closer to the network edge, where data is collected or consumed. Serverless computing is a paradigm for software development wherein application code is packaged into modular sub-components and deployed to platforms and infrastructure fully managed by a provider. Together, edge and serverless promise to provide a platform for real-time applications at scale – including self-driving cars, drone delivery services, and virtual and augmented reality experiences. However, the supporting infrastructure for edge computing at scale is far from fully deployed and serverless still requires complex software orchestration to realize applications. In this paper we define terms, review vendors, and discuss use cases. We present a rich set of recommendations for security and deployment backed by hands-on experience with the technology. Finally, we provide an overview of the current state of edge and serverless technology and outline our thoughts on these technologies’ promising outlook.

FY19

Blockchain for Decentralized Missions

Principal Investigators: David Bryson, Harvey Reed, and Gloria Serrao

Abstract

Blockchain is a disruptive technology that enables peer-to-peer decentralized sharing of transactional information across mission stakeholders and their enterprises. To better understand when blockchain is a good approach, this paper reviews blockchain technology, where it is being explored, emerging use cases, and analytic rationale for when blockchain should be considered. We aim to empower readers to begin exploration of blockchain in a substantive manner, starting with a study and/or prototype.
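Blockchain's tamper evidence comes from linking each block to a cryptographic hash of the block before it. The minimal sketch below, using SHA-256 from Python's standard library, shows only that hash-linking; it omits consensus, digital signatures, and peer-to-peer replication, and the block fields and records are hypothetical.

```python
import hashlib
import json

def block_hash(block):
    """SHA-256 over a canonical JSON encoding of the block."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()

def append_block(chain, data):
    """Add a block that records the hash of the previous block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "data": data})

def is_valid(chain):
    """Each block must reference the hash of the block before it."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))

chain = []
for record in ("shipment 17 received", "shipment 17 inspected", "shipment 17 released"):
    append_block(chain, record)
print(is_valid(chain))  # True
chain[1]["data"] = "shipment 17 lost"  # tamper with a past record
print(is_valid(chain))  # False: block 2's prev_hash no longer matches
```

In a decentralized deployment every stakeholder holds a copy of the chain, so rewriting history would require recomputing every later hash and convincing the other participants to accept the altered copy.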

FY19

Comparison of Open Source Quantum Software Engineering Frameworks for Universal Quantum Computers

Principal Investigator: Joe Clapis

Abstract

Quantum computing technology has recently experienced massive growth across academic, government, and commercial domains. This growth is geared not only toward the development of physical quantum computers but also toward the software tools engineers will use to program them. We explore several of the most prominent full-stack quantum frameworks, including Qiskit, QDK, Cirq, Forest, ProjectQ, and XACC, from a software engineering perspective to assess the strengths and weaknesses of each. Our assessment covers a broad range of software engineering criteria, including learning curve, ease of use, included functionality, language features, community size, platform independence, simulator performance, supported use cases, and cost. Our findings compile our experiences working with each framework against these criteria and are meant to guide decisions on which quantum computing frameworks best suit one’s particular needs.
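All of these frameworks let an engineer describe a circuit and run it on a simulator or hardware. To show what a statevector simulator does underneath, the pure-Python sketch below prepares a two-qubit Bell state with a Hadamard gate followed by a CNOT. It deliberately uses no framework API, and its qubit ordering (qubit 0 as the most significant bit) is an assumption of this sketch; frameworks differ on this convention.

```python
import math

def apply_h(state, qubit, n):
    """Apply a Hadamard to `qubit` of an n-qubit statevector (qubit 0 = most significant bit)."""
    s = 1 / math.sqrt(2)
    bit = 1 << (n - 1 - qubit)
    new = state[:]
    for i in range(len(state)):
        if not i & bit:  # visit each (|..0..>, |..1..>) amplitude pair once
            a, b = state[i], state[i | bit]
            new[i], new[i | bit] = s * (a + b), s * (a - b)
    return new

def apply_cnot(state, control, target, n):
    """Flip `target` in every basis state where `control` is 1."""
    cbit, tbit = 1 << (n - 1 - control), 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & cbit:
            new[i ^ tbit] = state[i]
    return new

n = 2
state = [1 + 0j, 0j, 0j, 0j]  # start in |00>
state = apply_h(state, 0, n)
state = apply_cnot(state, 0, 1, n)
probs = {format(i, "02b"): round(abs(a) ** 2, 3) for i, a in enumerate(state)}
print(probs)  # {'00': 0.5, '01': 0.0, '10': 0.0, '11': 0.5}
```

The statevector doubles in length with every added qubit, which is why simulator performance, one of the criteria assessed above, becomes a limiting factor quickly.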

Modeling and Simulation

FY18

Hybrid Simulation

Principal Investigators: Saurabh Mittal, Ph.D., Ernest H. Page, Ph.D. and Andreas Tolk, Ph.D.

Abstract

Simulation is a well-recognized engineering method that supports many domains of interest. Simulations can be discrete or continuous, they can use analytical models, and they can follow discrete-event, agent-based, or system-dynamics paradigms, depending on the requirements and the problem to be solved. Recently, combining these alternatives into so-called hybrid simulations has become popular. The Modeling & Simulation community has extended the definition of hybrid simulation, traditionally focused on mixing discrete and continuous methods, to be more inclusive of recent advancements such as multi-paradigm modeling. This report summarizes the main findings of a literature survey and of participation in expert panels and discussions on the topic, including academic collaborations on journal contributions. It is intended to give an overview of the field, including the state of the art, recognized research needs, and opportunities for future contributions. It also identifies the impact of the documented research on mission-focused applications and addresses the identified hurdles, which are more conceptual than technical in nature.
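As a small illustration of the traditional discrete-plus-continuous form of hybrid simulation, the sketch below couples a continuous model (a tank level integrated with fixed-step Euler) to a discrete-event model (a valve that opens and closes at scheduled times held in a priority queue). The tank, rates, and event times are hypothetical, and fixed-step synchronization is only one of several ways such hybrid models are coordinated.

```python
import heapq

def simulate(t_end, dt=0.01):
    """Hybrid model: continuous tank level driven by discrete valve events."""
    level = 0.0
    inflow, drain_rate = 1.0, 0.5             # hypothetical parameters
    valve_open = True
    events = [(2.0, "close"), (5.0, "open")]  # discrete events: (time, action)
    heapq.heapify(events)
    for step in range(round(t_end / dt)):
        t = step * dt
        # Discrete-event part: apply every event scheduled at or before time t.
        while events and events[0][0] <= t + 1e-9:
            _, action = heapq.heappop(events)
            valve_open = action == "open"
        # Continuous part: Euler step of dL/dt = inflow * [valve open] - drain_rate * L.
        rate = (inflow if valve_open else 0.0) - drain_rate * level
        level += rate * dt
    return level

print(round(simulate(2.0), 3))  # close to the exact value 2*(1 - e**-1) ≈ 1.264
print(round(simulate(5.0), 3))  # the level decays while the valve is closed
```

Agent-based or system-dynamics components could be coupled in the same way, with the event queue deciding when each paradigm's state is updated.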

Sensing and Imaging

FY21

Inertial Navigation Systems

Principal Investigators: Emily V. Bates and Joseph M. Kinast

Abstract

Inertial navigation systems (INS) use sensors such as accelerometers and gyroscopes to measure motional dynamics and estimate changes in position and velocity. By the mid-twentieth century, inertial navigation technology had matured to the point that INS could be installed in high-value platforms, such as submarines and commercial airliners. Since then, technological advances have driven a proliferation of INS that span an enormous range of size, weight, power, performance, and cost points. In recent decades, the explosive growth in application spaces relying on INS (such as smartphones, commercial and military drones, and autonomous vehicles), combined with growing concerns about the availability of global navigation satellite system signals, has driven renewed interest in understanding the capabilities and limitations of INS technology. This report provides a broad introduction to INS technologies, including an overview of basic principles, a discussion of various performance classes, and a summary of the current state of the art across those performance classes. We conclude by surveying emerging technologies that may have a disruptive impact on the current INS landscape.
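The basic principle, integrating measured acceleration once for velocity and again for position, also explains why INS errors grow over time: a constant accelerometer bias b produces a position error of about b·t²/2. The one-dimensional sketch below shows that drift for a platform that is actually at rest. The bias value and sample rate are hypothetical, and real systems must also handle gyroscope errors, gravity, and rotation between reference frames.

```python
def dead_reckon(accels, dt):
    """1-D inertial integration: acceleration -> velocity -> position."""
    v = x = 0.0
    for a in accels:
        v += a * dt
        x += v * dt
    return x, v

bias = 0.01  # hypothetical accelerometer bias, m/s^2 (about 1 milli-g)
dt = 0.01    # 100 Hz samples
for minutes in (1, 10):
    n = round(minutes * 60 / dt)
    x, _ = dead_reckon([bias] * n, dt)  # true acceleration is zero; only the bias is measured
    t = minutes * 60
    print(f"{minutes:>2} min: position error ~ {x:.1f} m (b*t^2/2 = {0.5 * bias * t ** 2:.1f} m)")
```

Because the error grows with the square of time, a bias that causes about 18 m of error after one minute causes about 1.8 km after ten, which is why sensor quality separates the performance classes and why INS is often aided by satellite navigation.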

FY21

The Mental Visual Imagery Spectrum

Principal Investigator: Claudia M. Froberg

Abstract

In 2015, the inability to voluntarily form mental visual images was labeled “aphantasia.” At the opposite end of the mental visual imagery spectrum is “hyperphantasia,” the ability to form extremely detailed mental visual images. Absent unique circumstances, an individual’s ability is fixed somewhere along the spectrum. Before mainstream media coverage of aphantasia, most people were unaware that their ability to form mental images might differ from that of others. Labeling and awareness of this spectrum of capability have reinvigorated mental imagery research. The spectrum is poised to become a useful demographic for segmenting human subjects research hypotheses and outcomes. This paper describes the state of the research area and proposes future exploration and relationships relevant to federal government agencies.

FY21

Through-wall Sensing and Tracking

Principal Investigator: Dennis F. Gardner, Ph.D.

Abstract

Radars with frequencies near WiFi (2.4 and 5.0 GHz) are used to detect and track people through walls. A research group from MIT is the only organization to date to demonstrate pose estimation (i.e., an estimate of the body’s skeletal configuration) from radar echoes. The key innovation is a cross-modal machine learning framework using both a camera and radar.
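For a sense of scale, the free-space wavelengths at the two WiFi frequencies named above follow from λ = c/f. The short sketch below just does that arithmetic; the helper name `wavelength_cm` is hypothetical.

```python
# Free-space wavelength, lambda = c / f, for the two WiFi frequencies above.
C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_cm(freq_hz: float) -> float:
    """Free-space wavelength in centimeters for a frequency in hertz."""
    return C / freq_hz * 100.0


for f_ghz in (2.4, 5.0):
    print(f"{f_ghz} GHz -> {wavelength_cm(f_ghz * 1e9):.1f} cm")
```

The result is roughly 12.5 cm at 2.4 GHz and 6.0 cm at 5.0 GHz, i.e., centimeter-scale wavelengths.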

FY19

Muon Imaging: Remote Sensing with High Energy Particles

Principal Investigators: Geoffrey Warner, Ph.D. and Michael West, Ph.D.

Abstract

Muons are unstable, negatively charged elementary particles with a mean lifetime of 2.2 microseconds and a mass roughly 200 times that of the electron. They arise naturally as byproducts of collisions between primary cosmic rays and nuclei in the upper atmosphere, which produce high-energy particle showers in which some of the secondary particles decay into muons, giving a flux at sea level of about 1 per square centimeter per minute. Because muons are so heavy and energetic, they can penetrate kilometers of solid rock before decaying, making them ideal candidates for passively imaging the interiors of otherwise inaccessible targets. Although this concept was first applied in the middle of the last century, only in recent years has the underlying technology matured to the point that imaging systems of reasonable size, weight, and power have been developed. This technology has already been applied to such targets as geophysical features (e.g., volcanoes and underground caverns) and man-made structures, such as buildings, shipping containers, and vehicles. In this report, we investigate the capabilities and limitations of this technology and review the current state of the art.
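The sea-level flux quoted above sets how long a passive muon imager must collect data. The back-of-the-envelope sketch below assumes the ~1 muon per cm² per minute figure applies uniformly over a horizontal detector, ignores angular distribution and absorption in the target, and treats counting as Poisson, so the relative statistical uncertainty is 1/√N. The function names are hypothetical.

```python
import math

# Approximate sea-level muon flux quoted in the abstract (assumed uniform).
FLUX_PER_CM2_PER_MIN = 1.0


def expected_counts(area_m2, minutes, flux=FLUX_PER_CM2_PER_MIN):
    """Expected muon count for a detector of area_m2 exposed for minutes."""
    return flux * area_m2 * 1e4 * minutes  # 1 m^2 = 1e4 cm^2


def relative_uncertainty(n):
    """Poisson counting: sigma = sqrt(N), so sigma / N = 1 / sqrt(N)."""
    return 1.0 / math.sqrt(n)


n = expected_counts(1.0, 60)  # a 1 m^2 detector for one hour
print(f"{n:,.0f} muons, relative uncertainty {relative_uncertainty(n):.2%}")
```

Under these assumptions a 1 m² detector collects about 600,000 muons per hour. Any absorbing target in front of the detector reduces that count, and the muons must also be divided among angular bins to form an image, so the statistics per image pixel are considerably poorer than this total suggests.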

FY18

Commercial Snapshot Spectral Imaging

Principal Investigators: Chris Galvan, John Grossmann and Michael West, Ph.D.

Abstract

Advancements in snapshot multispectral and hyperspectral technology will soon make spectral imaging available to the consumer market. The first wave of products will be dominated by visible to near infrared (VNIR) cameras due to the maturity of Charge-Coupled Device (CCD) and Complementary Metal Oxide Semiconductor (CMOS) imaging chip technology, which is used in everything from cell phone cameras to the Hubble Space Telescope. There is great potential for the law enforcement, warfighter, and intelligence communities to deploy compact spectral imaging on cell phones, dash cams, and small unmanned aerial vehicles. These uses go beyond conventional VNIR spectroscopy applications, such as agriculture and food safety. In this document we explore the “art of the possible” in VNIR imaging spectroscopy to enable material identification and characterization with low-cost (i.e., non-scientific-grade) commercial imagers.
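One widely used baseline for material identification from spectra, not necessarily the approach the report evaluates, is the spectral angle mapper (SAM). SAM compares each pixel's spectrum with reference spectra by the angle between them and assigns the pixel to the closest reference. The minimal sketch below assumes a hypothetical four-band camera; the reference values and function names are illustrative, not measured signatures.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra treated as vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_theta)


def classify(pixel, library):
    """Return the library entry whose spectrum has the smallest angle to pixel."""
    return min(library, key=lambda name: spectral_angle(pixel, library[name]))


# Hypothetical 4-band reference reflectances (blue, green, red, near-IR).
# These numbers are illustrative only, not measured signatures.
LIBRARY = {
    "vegetation": [0.05, 0.08, 0.06, 0.45],
    "soil": [0.15, 0.20, 0.25, 0.30],
}

# A brighter pixel with the same spectral shape as "vegetation".
pixel = [0.10, 0.16, 0.12, 0.90]
print(classify(pixel, LIBRARY))
```

Because the angle ignores overall vector length, the brighter pixel still matches "vegetation". This insensitivity to a uniform brightness scaling is one reason SAM is a common baseline. A real pipeline built on consumer-grade imagers would still need calibration and a much richer reference library.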

Space

Projects coming soon!

Transportation

FY21

Bystanders of Shared Micromobility

Principal Investigator: Tracy L. Sanders, Ph.D.

Abstract

According to the U.S. Department of Transportation, micromobility is “a category of modes of transportation that includes very light, low-occupancy vehicles such as electric scooters (e-scooters), electric skateboards, shared bicycles, and electric pedal assisted bicycles (e-bikes)” (Bureau of Transportation Statistics, 2020). Horace Dediu (see Joselow, 2020), who is widely credited with coining the term micromobility, defined it as covering “everything that is not a car” that weighs less than 1,000 pounds. Over the last few years, shared micromobility has evolved considerably. Until recently, shared micromobility consisted of shared e-bikes in a few select cities. E-bikes fit well into the existing transportation system because legislation governing bicycles and e-bikes already existed. People generally knew what to expect from bicycles: where they would ride, how fast they would go, and how they would behave. In 2018, the shared e-scooter market exploded (Bureau of Transportation Statistics, 2020). In many cities, officials were not informed of planned scooter deployments before they occurred, leading to confusion. Unfortunately, existing bicycle and e-bike legislation often did not apply cleanly to e-scooters. Since then, however, significant progress has been made, and many areas now have robust legislation governing shared e-scooters. Many cities have shared what they learned by publishing their findings. This work analyzes and summarizes findings from many cities, drawn from published reports, websites, discussions with planners, and research publications. The goal is to help planners and legislators who are integrating micromobility (mainly e-scooters) into their transportation infrastructures, with a special focus on the needs and responsibilities of bystanders (non-riders). The work focuses on e-scooters because these popular devices require new rules and legislation in many areas.

These devices affect the entire transportation infrastructure. The rise of micromobility has changed the conversation around transportation from a car-centric perspective to a multimodal one that considers the needs of all users. Johnston (see Scholz, 2020), associate director of the Center for the Comparative Study for Metropolitan Growth, summarized the impact well: “Not since the arrival of bikes and automobiles have we experienced such rapid change.” Because research on shared e-scooters is still limited (Yanocha & Allan, 2019; Shaheen & Cohen, 2019), this paper provides general information and resources about e-scooters.